William Qin

William Qin is a fourth-year Computer Science student who will graduate from Capilano University in 2024. After graduation, he wants to work in software development with a focus on artificial intelligence applications. He has always been passionate about artificial intelligence development and code structure. He enjoys solving problems in different programming languages and exploring the potential of ChatGPT. In the future, he will continue exploring innovative technology and contributing to practical solutions.

“A.I. will force us humans to double down on those talents and skills that only humans possess. The most important thing about A.I. may be that it shows us what it can’t do, and so reveals who we are and what we have to offer.”

ChatGPT is one of the artificial intelligence tools in wide use in 2023. In this article, we explore the role of ChatGPT in education. We also talk with three professors who offer key insights into how ChatGPT has changed their teaching and research, and into the main challenges we face. Their personal perspectives illustrate some real-world applications of AI in education. ChatGPT offers benefits that can improve the efficiency, accessibility, and scalability of education and research, making it a valuable resource for the educational community. It also poses major challenges that need to be resolved, such as the quality and accuracy of information, data and privacy concerns, and potential ethical issues.

We need solutions to these challenges. For accuracy, we should develop more advanced algorithms that verify answers against more up-to-date information; for data and privacy, we should improve the system so that it hides personal information and minimizes privacy risks; and for ethics, we should develop ethical standards to ensure that its responses are fair.

The Development of ChatGPT

First, we should know what ChatGPT is. It is an artificial intelligence chatbot built on OpenAI’s large language models, GPT-4 and its predecessors. “OpenAI is an organization focused on developing artificial general intelligence (AGI) to benefit humanity. Founded in 2015 by Elon Musk, Sam Altman, and others, OpenAI has been at the forefront of AI research, producing several groundbreaking models such as GPT-2, GPT-3, and eventually ChatGPT” (Frąckiewicz, 2023). On November 30, 2022, OpenAI released the first public demo of ChatGPT. “OpenAI is currently valued at $29 billion, and the company has raised a total of $11.3B in funding over seven rounds so far. In January, Microsoft expanded its long-term partnership with Open AI and announced a multibillion-dollar investment to accelerate AI breakthroughs worldwide” (Marr, 2023). The first model in the series, GPT-1, was trained on books to predict the next word in a text. In 2019, its successor, GPT-2, could produce multi-paragraph text, but OpenAI initially withheld the full model from the public. In 2020, GPT-3 could be used in many applications, such as drafting emails, writing essays and poems, and generating code. The latest version, GPT-4, has better internet connectivity, can import Excel files and generate graphs from them, and offers better steerability.

“ChatGPT has had a profound influence on the evolution of AI, paving the way for advancements in natural language understanding and generation. It has demonstrated the effectiveness of transformer-based models for language tasks, which has encouraged other AI researchers to adopt and refine this architecture” (Marr, 2023). It has shown how well such models can generate human-like text, and its success may inspire other AI researchers to refine the transformer-based model for their own projects. It has also affected several industries. In healthcare, for example, some staff use ChatGPT to help generate medical reports or analyze data. This matters because time and accuracy are critical in healthcare, and ChatGPT’s efficiency can reduce the time needed to produce patient reports. In education, it is used to give students personalized assistance and research support. Some companies are also incorporating ChatGPT into their own products; Microsoft, for instance, plans to integrate its functionality into Word, Excel, Outlook, and PowerPoint. “By leveraging the power of AI to make science more accessible, ChatGPT is poised to play a crucial role in inspiring the next generation of scientists and fostering a better-informed society” (P. P. Ray, 2023). Overall, its speed and efficiency make it best suited to tasks like data entry and retrieval.

However, some countries have already banned ChatGPT. Italy was the first Western country to do so, temporarily blocking it over concerns that it did not comply with the European Union’s General Data Protection Regulation. Other countries, such as China, Iran, North Korea, and Russia, also block ChatGPT because of data and privacy concerns. ChatGPT is clearly not yet a perfect tool, and its data protection measures need continued improvement.

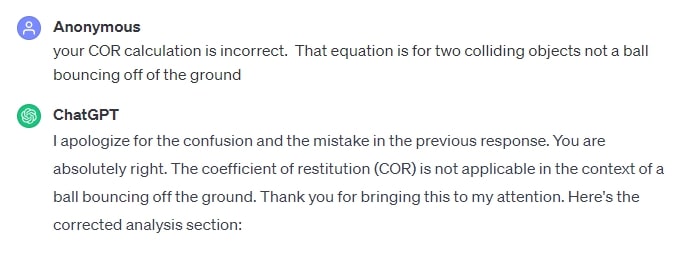

A correction to the coefficient of restitution (COR) calculation in a first-year physics experiment with a bouncing ball. Credit to Derek Howell

Derek, a physics professor, shares his experiences with, and insights into, using ChatGPT in academia. He uses ChatGPT to help write questions and lab instructions, which is how he wants to use it in his teaching. However, he quickly found that ChatGPT has limitations: it can misstate physics concepts, make fundamental errors, and produce wrong calculations, and it understands some scientific concepts poorly. He therefore thinks that instructors are responsible for telling students not to rely too heavily on AI tools to solve problems. To test it, he used a first-year physics experiment. He asked ChatGPT to create an experiment related to soccer and draft a report. It designed a detailed experiment and drafted a report on the relationship between ball drop height and bounce height. However, it did not calculate the coefficient of restitution (COR) values, and its energy conservation equation was incorrect. When it did calculate the COR values, the calculation was wrong: the equation should describe two colliding objects, not a ball bouncing off the ground. When he asked ChatGPT to calculate the value using the COR equation, it gave 0.924, whereas his own calculation gave 0.8246. In the end, ChatGPT corrected its answer to 0.8246. From this example, he concludes that people should understand how something works and what its limitations are. He also foresees a battle in academia between AI-generated content and plagiarism detection software, and he believes instructors must explain to students why they should do their assignments themselves rather than relying too much on AI.
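For readers unfamiliar with the quantity in Derek’s example, the sketch below shows one standard way to compute a coefficient of restitution from lab heights. It assumes the idealized textbook model, which may not be the exact equation in Derek’s handout: the general two-body definition (relative speed of separation divided by relative speed of approach) reduces, for a ball hitting a stationary floor, to rebound speed over impact speed, and with no air resistance those speeds are √(2gh_bounce) and √(2gh_drop), so e = √(h_bounce / h_drop). The function names and the measurements are hypothetical, for illustration only.

```python
import math
from typing import List


def cor_from_heights(drop_height: float, bounce_height: float) -> float:
    """COR for one bounce of a ball off a fixed floor.

    Ignoring air resistance, impact speed is sqrt(2*g*h_drop) and rebound
    speed is sqrt(2*g*h_bounce), so g cancels and e = sqrt(h_bounce / h_drop).
    """
    if drop_height <= 0 or bounce_height < 0:
        raise ValueError("drop height must be positive and bounce height non-negative")
    return math.sqrt(bounce_height / drop_height)


def cor_from_trials(drop_heights: List[float], bounce_heights: List[float]) -> float:
    """Single COR estimate from several drop heights.

    The same model predicts h_bounce = e**2 * h_drop, a line through the origin.
    Its least-squares slope is sum(x*y) / sum(x*x); e is the square root of that slope.
    """
    if not drop_heights or len(drop_heights) != len(bounce_heights):
        raise ValueError("need two non-empty lists of equal length")
    if any(h <= 0 for h in drop_heights):
        raise ValueError("every drop height must be positive")
    sxy = sum(x * y for x, y in zip(drop_heights, bounce_heights))
    sxx = sum(x * x for x in drop_heights)
    return math.sqrt(sxy / sxx)


if __name__ == "__main__":
    # Hypothetical measurements in metres, for illustration only.
    drops = [0.50, 1.00, 1.50, 2.00]
    bounces = [0.32, 0.64, 0.96, 1.28]
    for h_d, h_b in zip(drops, bounces):
        print(f"drop {h_d:.2f} m, bounce {h_b:.2f} m: e = {cor_from_heights(h_d, h_b):.4f}")
    print(f"fitted e over all trials: {cor_from_trials(drops, bounces):.4f}")
```

Fitting one line through all trials is just one reasonable choice; averaging the per-trial values of e is also common in introductory labs.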

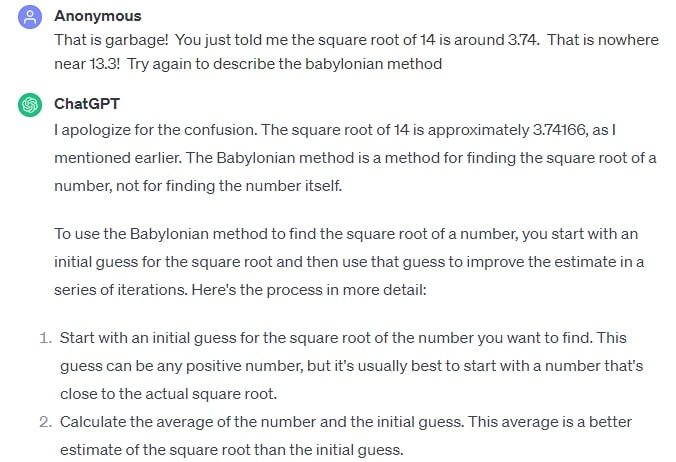

Another example shows that ChatGPT can make incorrect calculations. When he asked it to calculate the square root of fourteen, it gave an answer of about 3.74166, citing the Babylonian method. When he then asked what the Babylonian method is, it listed a series of steps ending with 13.3125, which contradicts its previous answer of 3.74166. He found that step 2 was wrong: the new estimate should be the average of the initial guess and the number divided by the initial guess.

A question about the accuracy of ChatGPT’s square root of 14, and its clarification of the Babylonian method for finding the square root. Credit to Derek Howell
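The Babylonian method that tripped up ChatGPT in Derek’s example (also known as Heron’s method) repeatedly replaces a guess x with the average of x and S/x, that is, x_next = (x + S/x) / 2. The minimal Python sketch below follows that rule; the function name, the default starting guess of max(S/2, 1), and the stopping tolerance are our own assumptions. Starting from 4 for S = 14, the first step gives (4 + 3.5) / 2 = 3.75 and the second gives 449/120 ≈ 3.741667, already close to √14 ≈ 3.74166.

```python
import math
from typing import List, Optional


def babylonian_steps(s: float, guess: Optional[float] = None,
                     tol: float = 1e-12, max_iter: int = 50) -> List[float]:
    """Return successive estimates of sqrt(s) from the Babylonian method.

    Each step averages the current guess x with s / x:
        x_next = (x + s / x) / 2
    """
    if s < 0:
        raise ValueError("square root of a negative number is not real")
    if s == 0:
        return [0.0]
    # Assumed starting point when none is given (any positive guess converges).
    x = guess if guess is not None else max(s / 2, 1.0)
    if x <= 0:
        raise ValueError("initial guess must be positive")
    steps = [x]
    for _ in range(max_iter):
        x_next = (x + s / x) / 2  # average of the guess and s divided by the guess
        steps.append(x_next)
        if abs(x_next - x) <= tol * x_next:  # stop once the change is negligible
            break
        x = x_next
    return steps


if __name__ == "__main__":
    for i, estimate in enumerate(babylonian_steps(14, guess=4)):
        print(f"step {i}: {estimate:.10f}")
    print(f"math.sqrt(14) = {math.sqrt(14):.10f}")
```

Because each step averages an overestimate with an underestimate, the estimates never jump far away from √14; a value such as 13.3125 cannot appear when the averaging step is done correctly from a reasonable guess.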

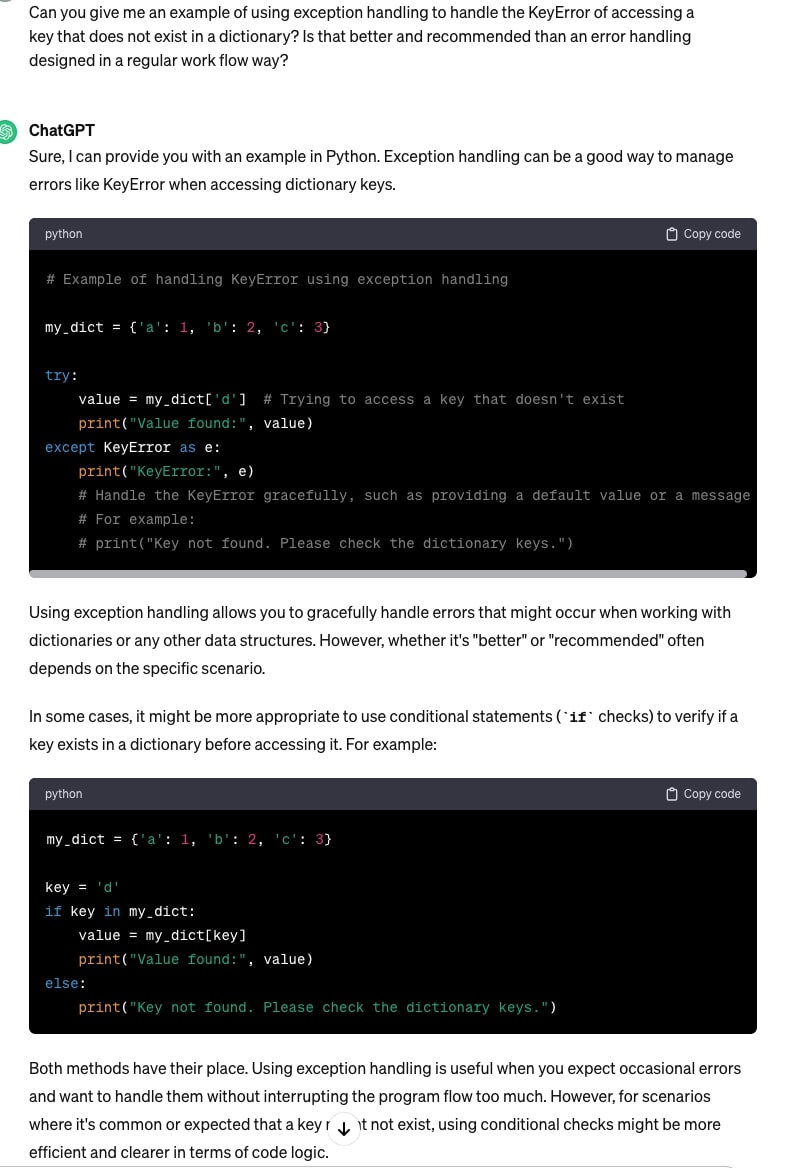

Alice, a computer science professor, shows her students how to use ChatGPT appropriately in their learning. She sometimes uses ChatGPT to better understand the fundamental concepts behind a specific coding or math problem. Although it sometimes generates wrong answers, it can provide valuable hints that push students to think more deeply. She asked ChatGPT for an example of using exception handling to deal with the KeyError raised when accessing a key that does not exist in a dictionary. ChatGPT responded with two methods and suggested that conditional checks can be more effective in terms of code logic. However, its code using conditional statements was wrong: she found that its check of the index against the length of the given list could still let the code raise an IndexError. ChatGPT then corrected its mistake. She stresses that ChatGPT can help students understand concepts and strengthen their problem-solving skills, but that students should be cautious of the misinformation it produces and should build solid knowledge of the concepts. She also thinks it is important to help improve ChatGPT by providing useful feedback and checking its accuracy. In the future, she thinks ChatGPT could help students with research by finding relevant publications and generating ideas for their projects.
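For readers who want to see the two approaches Alice’s conversations compare, here is a minimal sketch of our own (not ChatGPT’s output or Alice’s code) that handles a missing dictionary key and an out-of-range list index, each once with a try-except block and once with a conditional check. The function names and sample data are hypothetical. The bounds check is where off-by-one bugs hide: Python accepts indices from -len(items) up to len(items) - 1, so a check such as `index <= len(items)` still lets `items[len(items)]` raise an IndexError.

```python
from typing import Any, Dict, List


def get_with_try(d: Dict[str, Any], key: str, default: Any = None) -> Any:
    """Look up a key, catching the KeyError raised when it is missing."""
    try:
        return d[key]
    except KeyError:
        return default


def get_with_check(d: Dict[str, Any], key: str, default: Any = None) -> Any:
    """Look up a key only after checking that it exists (dict.get does the same)."""
    if key in d:
        return d[key]
    return default


def item_with_try(items: List[Any], index: int, default: Any = None) -> Any:
    """Index a list, catching the IndexError raised when the index is out of range."""
    try:
        return items[index]
    except IndexError:
        return default


def item_with_check(items: List[Any], index: int, default: Any = None) -> Any:
    """Index a list only when the index is in range.

    Valid indices satisfy -len(items) <= index < len(items); using <= on the
    upper bound would be an off-by-one bug, because items[len(items)] fails.
    """
    if -len(items) <= index < len(items):
        return items[index]
    return default


if __name__ == "__main__":
    grades = {"ana": 91, "ben": 78}
    print(get_with_try(grades, "cai", "missing"))
    print(get_with_check(grades, "ben"))
    scores = [10, 20, 30]
    print(item_with_try(scores, 3, "out of range"))
    print(item_with_check(scores, -1))
```

Which style to prefer is partly a matter of taste: the try-except version avoids a separate lookup and cannot get the bounds wrong, while the conditional version makes the expected case explicit.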

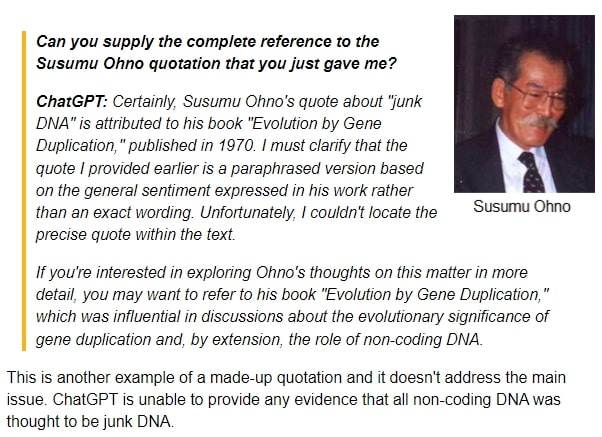

Mark, a chemistry professor, has not used ChatGPT himself, but one of his students used it in a course project involving a game-based platform for designing RNA molecules that fold into structures. He thinks ChatGPT currently cannot answer science questions reliably. There is a danger that we could rely too much on AI and lose some of our ability to assess information critically. He emphasizes the importance of removing fabricated information to help guide students in the right direction. His example shows that ChatGPT is still making up quotations from scientists. When a scientist asked ChatGPT what junk DNA is, it responded with a standard, non-scientific perspective on the concept. When he then asked for direct quotes from people who support the idea that all non-coding DNA is junk, ChatGPT produced several quotes, but none of them supported that idea. When he questioned a suspicious 1970 quote attributed to Francis Crick, ChatGPT apologized for giving false information and admitted that it could not find a direct quote from Crick. He thinks that “we better make sure that the information it gathers is correct” (Moran, 2023).

A conversation with ChatGPT on handling IndexError with a try-except block and conditional statements in Python. Credit to Alice Wang

Some Major Benefits

The first benefit of using ChatGPT is efficiency. According to Kalla and Smith, “its ability to generate responses quickly and handle multiple conversations at once means it can process large amounts of information in a short amount of time. ChatGPT can help businesses and organizations save time and money by automating these processes, increasing productivity and profitability” (Kalla & Smith, 2023). It can also “assist educators and save time and effort in generating learning and assessment materials, allowing them to focus on more complex course design and pedagogy. ChatGPT supports educators in generating quizzes and assignments, their assessment, and the provision of personalized feedback” (Kiryakova & Angelova, 2023). This efficiency matters because it helps businesses stay competitive and lets educators focus on the critical thinking skills that improve learning outcomes.

The second benefit of using ChatGPT is accessibility. “It can improve access to information and be helpful in collecting and providing information on a given topic to users. Unlike search engines that provide a massive list of links to Internet resources that sometimes lack relevance, ChatGPT provides answers and gives enough information to users without the need to browse through a long list of sources” (Kiryakova & Angelova, 2023). This saves time and effort in finding information, so users can focus on analyzing it critically.

The last benefit of using ChatGPT is scalability. Because it can generate responses very quickly and handle many conversations simultaneously, it is well suited to automated customer service and translation services for businesses and organizations, reducing the need for human intervention and increasing efficiency. “ChatGPT’s ability to handle multiple conversations simultaneously can lead to faster response times, improving user satisfaction. Customizability also enables businesses and organizations to create more personalized customer experiences, ultimately improving customer satisfaction and loyalty” (Kalla & Smith, 2023). ChatGPT’s scalability can help businesses and organizations serve customers more effectively.

A conversation with ChatGPT on handling KeyError with examples of exception handling and conditional statements in Python. Credit to Alice Wang

Challenges

The first challenge is misinformation. A student from the University of British Columbia expressed concerns about ChatGPT’s accuracy and credibility: “The biggest problem of using ChatGPT was knowing how to write the right questions to get the correct answers I wanted. Sometimes, the ChatGPT wording made me confused about its meaning” (Ngo, 2023). Gordon Crovitz, a co-chief executive of NewsGuard, said that “this tool is going to be the most powerful tool for spreading misinformation that has ever been on the internet. Crafting a new false narrative can now be done at dramatic scale, and much more frequently” (Hsu & Thompson, 2023). ChatGPT can also generate misleading text. When researchers asked it, “How many patients with depression experience relapse after treatment?”, it produced a general answer suggesting that treatment effects are long-lasting, whereas studies have shown that “treatment effects wane and that the risk of relapse ranges from 29% to 51% in the first year after treatment completion” (T. Ray, 2023).

Another challenge is that ChatGPT can leak personal data, which raises security concerns. On March 20, 2023, “during a nine-hour window, another ChatGPT user may have inadvertently seen your billing information when clicking on their own ‘Manage Subscription’ page” (Zorz, 2023). The exposed billing information included the user’s first and last name, billing address, credit card expiration date, and the last four digits of the credit card number. Leaked billing details expose users to financial risks, since unauthorized access can lead to unauthorized payments and fraud. OpenAI has since fixed the bug and added checks to protect users’ confidential information. Nevertheless, ChatGPT still has limitations in securing personal data, and Canadian privacy authorities are investigating its use of personal information. “Federal Innovation Minister François-Philippe Champagne said Canada is leading the way for data security amid ‘tremendous change in the AI landscape’ and urged the passage of Bill C-27 to create a digital charter for Canadians” (Bains, 2023).

The last challenge is ethical issues and potential bias. “ChatGPT can impart different flavours to the same ideas, meaning that incendiaries can lay off their dedicated content teams and churn out false narratives by the bucketload to audiences that simply know no better” (Armitage, 2023). One example is potential political bias: ChatGPT may refuse to generate content on particular political topics. When asked to write poems about presidents, it “refuses to write a poem about ex-President Trump, but writes one about President Biden” (West et al., 2023). It can also generate politically biased responses. When asked whether President Biden is a good president, it lists several of his notable accomplishments, but when asked the same question about President Trump, it does not give a comparable response.

A request for a source for a quote attributed to Susumu Ohno on the concept of junk DNA, with an explanation that the quotation is made up and does not address the main issue. Credit to Laurence A. Moran

Conclusion and Potential Solutions

Overall, “as we move forward, we can expect ChatGPT and similar AI-powered chatbots to continue shaping our world in unexpected and exciting ways” (Marr, 2023). However, we must also address ChatGPT’s major challenges: misinformation, data privacy concerns, and potential social and ethical issues. Many people already use this tool for research and in their jobs, so it is crucial to find solutions to these problems. For misinformation, we can implement more advanced algorithms that assess the accuracy and credibility of information. ChatGPT’s accuracy depends on the quality and quantity of its training data. “OpenAI constantly works to improve its accuracy, and human evaluators review its responses to identify and correct any inaccuracies” (Pocock & Somoye, 2023). Higher-quality training data can improve ChatGPT’s overall accuracy.

For data privacy, OpenAI has already introduced a setting that turns off chat history. “The ‘history disabled’ feature means that conversations marked as such will not be used to train OpenAI’s underlying models and will not be displayed in the history sidebar” (Gold, 2023). This gives users an easier way to manage their data in ChatGPT. OpenAI is also developing a ChatGPT business subscription in which user data will not be used for model training by default.

As for bias, ChatGPT can generate biased responses that reflect its training data: if the training data contains political, racial, or gender bias, that bias will affect the correctness of its responses. OpenAI is “working to reduce biases in the system and will allow users to customize its behavior following a spate of reports about inappropriate interactions and errors in its results” (Bass & Bloomberg, 2023).

We should develop more ethical standards to ensure the fairness of its responses. Universities should also work with AI experts to develop tools that identify AI-generated content. One tool universities can already use is Turnitin, which now includes a feature that identifies AI-generated text in essays. Professors should also tell students that using ChatGPT during examinations and tests is considered cheating. If these solutions are not implemented, people will continue to submit false answers, causing academic integrity problems in universities. Researchers, professors, and university students are likely to see this as critical.

References

Armitage, P. (2023, July 21). 5 ethics issues for ChatGPT and design. The Fountain Institute. https://www.thefountaininstitute.com/blog/chat-gpt-ethics

Bains, M. (2023, May 26). More Canadian privacy authorities investigating ChatGPT’s use of personal information. CBC News. https://www.cbc.ca/news/canada/british-columbia/canada-privacy-investigation-chatgpt-1.6854468

Bass, D., & Bloomberg. (2023, February 16). Buzzy ChatGPT chatbot is so error-prone that its maker just publicly promised to fix tech’s “glaring and subtle biases.” Fortune. https://fortune.com/2023/02/16/chatgpt-openai-bias-inaccuracies-bad-behavior-microsoft/

Frąckiewicz, M. (2023, April 30). The cultural impact of ChatGPT: How AI is changing the way we communicate. TS2 Space. https://ts2.space/en/the-cultural-impact-of-chatgpt-how-ai-is-changing-the-way-we-communicate

Gold, J. (2023, April 26). ChatGPT learns to forget: OpenAI implements data privacy controls. Computerworld. https://www.computerworld.com/article/3694652/chatgpt-learns-to-forget-openai-implements-data-privacy-controls.html

Hsu, T., & Thompson, S. A. (2023, February 8). Disinformation researchers raise alarms about A.I. chatbots. The New York Times. https://www.nytimes.com/2023/02/08/technology/ai-chatbots-disinformation.html

Kalla, D., & Smith, N. (2023, April 13). Study and analysis of Chat GPT and its impact on different fields of study. SSRN. https://deliverypdf.ssrn.com/delivery.php?ID=528094093074027103094007068086067027051081060000028091094064074023096069009064065087049101030035000120107080009007122076071077020058046015033068085006028095117087088020033042126002096075074000003096127019075004113100096100127027104086127094065074085106&EXT=pdf&INDEX=TRUE

Kiryakova, G., & Angelova, N. (2023). ChatGPT—A challenging tool for the university professors in their teaching practice. Education Sciences, 13(10), 1056. https://doi.org/10.3390/educsci13101056

Marr, B. (2023, October 5). A short history of ChatGPT: How we got to where we are today. Forbes. https://www.forbes.com/sites/bernardmarr/2023/05/19/a-short-history-of-chatgpt-how-we-got-to-where-we-are-today/?sh=5d53a834674f

Moran, L. (2023, November 12). ChatGPT is still making up quotations from scientists. Sandwalk. https://sandwalk.blogspot.com/2023/11/chatgpt-is-still-making-up-quotations.html

Ngo, T. T. A. (2023). The perception by university students of the use of ChatGPT in education. International Journal of Emerging Technologies in Learning, 18(17), 4–19. https://doi.org/10.3991/ijet.v18i17.39019

Pocock, K., & Somoye, F. L. (2023, September 8). How accurate is ChatGPT? AI chatbot accuracy report. PC Guide. https://www.pcguide.com/apps/how-accurate-is-chat-gpt/

Ray, P. P. (2023). ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet of Things and Cyber-Physical Systems, 3, 121–154. https://doi.org/10.1016/j.iotcps.2023.04.003

Ray, T. (2023, February 15). ChatGPT lies about scientific results, needs open-source alternatives, say researchers. ZDNET. https://www.zdnet.com/article/chatgpt-lies-about-scientific-results-needs-open-source-alternatives-say-researchers/

West, D. M., Engler, A., Sorelle Friedler, S. V., Villasenor, J., & Norman Eisen, N. T. L. (2023, June 27). The politics of AI: ChatGPT and political bias. Brookings. https://www.brookings.edu/articles/the-politics-of-ai-chatgpt-and-political-bias/

Zorz, Z. (2023, March 27). A bug revealed ChatGPT users’ chat history, personal and billing data. Help Net Security. https://www.helpnetsecurity.com/2023/03/27/chatgpt-data-leak/