Lexy Atterton

Lexy Atterton is currently in her last semester at Capilano University. She is excited to complete her undergraduate degree majoring in Psychology, with a concentration in Applied Psychology. Lexy has always thrived working with children and youth. Moving forward she hopes to pursue a career where she can continue helping youth and making a difference in their lives, using skills learned from her time at Cap U.

At a time when social media is becoming ever more integrated into our everyday lives, there are both positive and negative effects that come with it. Over the last ten years, the prevalence and popularity of online family-based content has increased. This content often centers around the creators’ children and their everyday lives. While this content may seem fun and harmless to most, the reality can be much darker. Family-centered content can be exploitative for many reasons. Creators will post footage of their children’s mental breakdowns, arguments, and more. The children are not able to consent to this content, which may have detrimental impacts on their wellbeing as they get older. The psychological effects of children’s online stardom can be compared to the outcomes seen in child actors.

Unlike child actors, children who work in social media currently have no laws in place protecting them. Parents can have their children act in front of a camera as much as they please, with no limits on how long they work. There are also no laws requiring that a portion of the income made from this content go to the children. These factors can turn a seemingly innocent practice into an exploitative business. Many family YouTube channels and TikTok accounts have come under fire for the abuse and exploitation of their children. Examples include social media accounts such as “Fantastic Adventures”, “The Stauffer Life”, and “DaddyOFive”. As social media entertainment becomes more prevalent, the dangers that come with it for children will also increase.

YouTube is home to hundreds of monetized family channels.

I first came across this topic during the COVID-19 lockdowns, when I saw a news article detailing a family vlog channel’s exploitation of their children. From there I watched hours of videos detailing other examples and read countless articles discussing the matter. As the years have gone by, I have only become more passionate about this subject as more examples of abuse and exploitation have come out. As a person who has worked with children for years, I care deeply for them and feel a personal pull towards this subject. Parents should be the ones protecting children from exploitation, not the ones allowing it to happen to them or exploiting them themselves. The children posted on these channels or accounts are too young to consent and deserve protection. Far too many parents do not understand the dangers that come with posting their children online. In North America, approximately eight in ten parents share their children, or information about their children, on social media (Edney, 2022). Even parents who post what they think of as “cute” or “harmless” videos and pictures of their children on public accounts give online predators easy access to their children. Advancements in technology have also made possible what is known as “deepfake” pornography. Online predators now have the ability to take images of children and create realistic child pornography from them. In my own life I have seen family members post pictures of their kids online without knowing the dangers that come with it. For these reasons I feel a deep, personal need to bring light to this topic and advocate for children online.

The fame and success parents can gain from posting their children online can come at the cost of their child’s wellbeing. Part of what makes family content so exploitative is oversharing, also known as “sharenting”. “Sharenting” describes the way some parents use social media to reveal information about their children (Edney, 2022). About 74% of parents know of another parent who has overshared information about their child online (Edney, 2022). Sharenting can have an initial seemingly positive impact as many parents have found community with other families through sharing their children online (Edney, 2022). The negative impacts should overshadow the positive ones, as the possible long-term effects on children are extremely concerning.

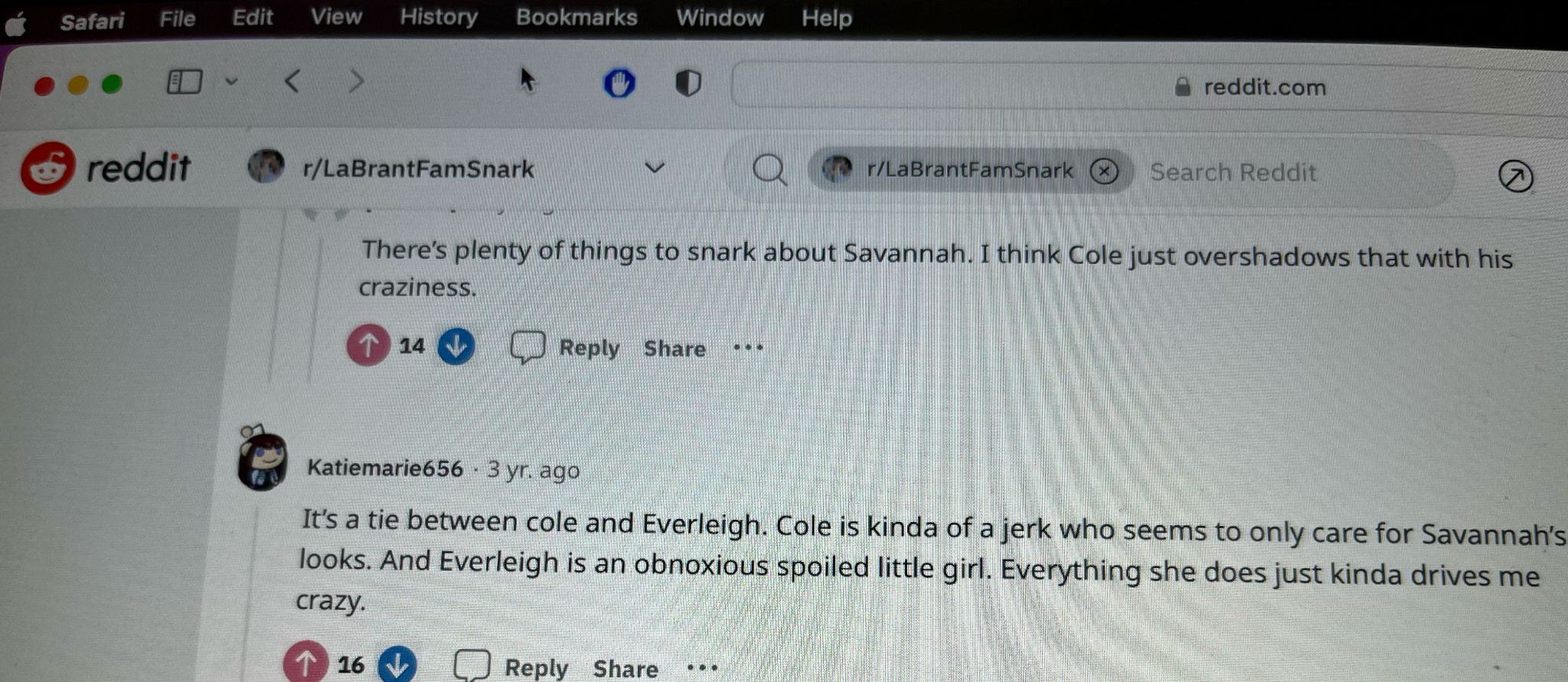

While this is a fairly new topic of concern, research has shown possible negative outcomes associated with children online. Studies have found that child social media stars are more likely to experience depression, anxiety, and substance abuse as they get older (Edwards, 2023). This may be partially due to the way their self-esteem has been linked to public opinions, such as comments about them in forums or on their videos (Edwards, 2023). Forums such as Reddit or Guru Gossip have thousands of users who share their positive and negative opinions on influencers’ looks, personalities, and lives. This gives children the unique ability to read content centered around themselves and their family (C. Thorpe, personal communication, March 3, 2023). Comments about children’s looks are particularly common and can lead to body image issues, including body dysmorphia or an eating disorder (Edwards, 2023). Photos, videos, and other information about children can also be found by other children their age (Edney, 2022). This means that children can experience bullying both in their real lives at school and online from strangers (Edney, 2022). Some child influencers even have their own social media accounts, meaning that anyone can message them directly (Edwards, 2023). Furthermore, sharenting can also impact a child’s digital footprint (Edney, 2022). A future employer could take issue with content that the child participated in, or behaviour associated with them (Edney, 2022). Children could essentially be punished in the future for content they could not even consent to being posted.

Just a few of the mean comments a child can find about themselves on Reddit.

Sharenting also makes child influencers more susceptible to online stalkers and predators (Edwards, 2023). For example, in 2021 a fifteen-year-old TikToker was harassed and stalked by an eighteen-year-old man (Edwards, 2023). The man sent crude messages and was eventually blocked after asking the young girl to send explicit photos of herself (Edwards, 2023). The man retaliated by travelling from his home in Maryland to the TikToker’s home in Florida, armed with a shotgun (Edwards, 2023). He shot through the girl’s front door only for his weapon to jam, allowing the girl’s father the chance to shoot him to death in self-defense (Edwards, 2023). Parents can put their own children at risk by sharing pictures and videos of where they live. Even though they may not share their address, online stalkers and “fans” have been shown to find social media stars’ houses just from views of the exterior that appear in photos or videos. While stalking is a concern, sharenting also makes children more vulnerable to online sexual predators (Edney, 2022). For example, a mother on TikTok gained millions of followers by posting videos of her daughter trying new foods. People soon brought to her attention that pictures and links to videos of her daughter were being posted to pedophilia forums. The mother responded by saying people were wrong for shaming her for sharing her daughter. She defended herself, stating that she would change the settings on videos of her daughter so that people could not share or download them. With today’s technology and the ability to screen record, these measures will not prevent much. To this day, if you click on her videos, you can find disturbing comments sexualizing the three-year-old girl. Even though children are “not doing anything sexual . . . they’re being sexualized by predators online” (C. Thorpe, personal communication, March 3, 2023).

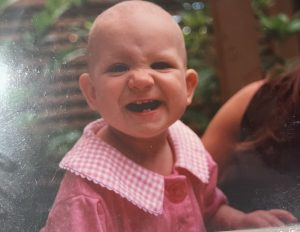

An example of a picture a parent might think is cute but a child may be uncomfortable with being online as they get older.

Even more concerning is the ability to create deepfake pornography. A study found that in 2018 one in five police officers reported encountering deepfake child pornography during child sexual abuse investigations (Eelmaa, 2022). Deepfake technology allows predators to create realistic pornographic content out of any photo or video they please (Eelmaa, 2022). The results can appear so realistic that many viewers would not be able to tell that they are fake unless told otherwise. Forums on the dark web have been created where users can request deepfake content by sending videos or pictures of children they have found online (Eelmaa, 2022). This creates new and unique threats to children and their safety online (Eelmaa, 2022). This content also makes it more difficult for law enforcement to identify which children are being physically abused and assaulted and which ones have been edited into the content (Eelmaa, 2022). This disturbing technology serves as an unsettling reminder that children are not safe being posted online and should be protected by their parents, not exploited by them.

Examples of abuse and exploitation can sadly be seen in many popular social media accounts. A monetized social media account called “DaddyOFive” posted family prank videos that many found to be problematic (Edney, 2022). The account was run by the children’s father, Michael, and Heather, the stepmother of two of the children (Edney, 2022). The popular account gained negative attention after a video went viral in which the parents “pranked” their children by pouring fake ink on the floor and blaming them (Belcher, 2020). The couple filmed as they screamed and swore at their children until they cried, insisting that they didn’t do it (Belcher, 2020). The channel had dozens of other videos “pranking” their children to the point of distress (Edney, 2022). This particular video got the attention of the children’s biological mother as well as child protective services (Belcher, 2020). Michael and Heather lost custody of two children, who were placed into their biological mother’s home (Belcher, 2020). The two were sentenced to five years of probation and were forbidden to create or post content featuring their other children (Edney, 2022). The couple ignored this and soon began uploading family content to a second channel until YouTube banned their accounts (Edney, 2022).

A YouTube account called “The Stauffer Life” faced backlash in 2020 when the family announced they would be giving up their five-year-old adopted child and placing him with another family (Edney, 2022). The family had first started documenting the child’s adoption in 2016 on their monetized YouTube channel, sharing the process with their almost one million subscribers (Elliott, 2020). Although many adoption agencies and experts suggest that parents do not share information about their child before the adoption becomes official, the Stauffers ignored this (Edney, 2022). The parents even created a campaign to raise money to ease adoption expenses (Edney, 2022). They did so by creating a digital puzzle in which a piece would be removed every time a subscriber donated five dollars (Edney, 2022). The puzzle would eventually reveal a picture of their adopted son (Edney, 2022). The family created a total of twenty-seven, now deleted, videos of their adoption “journey” (Edney, 2022). During the adoption process the agency and a doctor discovered the child had a brain injury and would need extensive care (Edney, 2022). The couple decided to go through with the adoption even though they were previously opposed to taking on a child with a disability and were cautioned against it by the child’s doctor (Edney, 2022). The couple officially adopted their son from China in 2017 and posted a video of the big day which garnered over five million views (Edney, 2022). The choice to adopt a Chinese child was already a touchy subject, as many white families can develop a “savior complex” when it comes to adopting children of colour. While transnational adoption can be seen as a positive humanitarian act, many POC adoptees feel differently (Koo, 2019). A study by Koo (2019) found that among a sample of Korean adoptees brought up by white families, almost all participants felt confusion and uncertainty about their sense of belonging.
Unfortunately, many parents who adopt such children fail to integrate their culture, leaving them with a sense that their origins have been erased (Koo, 2019). Confusion in navigating their identity can lead to heightened mental health problems and feelings of instability later in life (Koo, 2019).

After the adoption, Myka Stauffer shared that she was “heartbroken” to learn that her adopted child was diagnosed with autism (Elliott, 2020). Despite this diagnosis, the couple continued to share content including information about the child such as his struggles with food insecurity, medical history, developmental challenges, and emotional meltdowns (Edney, 2022). The couple posted concerning content such as videos with his hands duct-taped to prevent thumb sucking and footage of him having a meltdown while his mother followed him with the camera asking if he was “done fitting” yet (Edney, 2022). Throughout the child’s three years in their home, the Stauffers shared content of him including sponsored videos and Instagram posts (Edney, 2022). The family eventually stopped including him in content once they made the decision to rehome him (Elliott, 2020). When explaining to the public why they made this decision, they stated that the child had more “issues” than they had anticipated, which included conflict between him and their other four biological children (Elliott, 2020). The Stauffers ironically stated that they would no longer be sharing information about the child to “protect his privacy”, after years of profiting off content centered around him (Elliott, 2020). Although the family took down videos that included the child, reuploaded content can still be found all over the internet. One day this child could grow up and see this harmful footage and information. The internet is forever, and its effects can be detrimental, especially for children like him. One thing he will never see is any of the money made off the content he was featured in (Edney, 2022).

One of the most disturbing examples yet is a YouTube channel called “Fantastic Adventures”, run by a single mother named Machelle Hobson. The mother uploaded content of her seven adopted children, gaining almost 800,000 subscribers and millions of views (Mettler, 2019). Machelle was ecstatic about the amount of money flooding in and even pulled her children out of school so that they could make more content (Edney, 2022). In 2019 the dark truth of “Fantastic Adventures” was revealed when Machelle was arrested for molestation and abuse after her biological nineteen-year-old daughter reported her to the police (Edney, 2022). Machelle punished her children, aged six to fifteen, by withholding food and water for days, pepper spraying them, forcing them to take ice baths, beating them with belts and hangers, forbidding them from using the bathroom, and locking them in small closets (Mettler, 2019; Edney, 2022). Many of these “punishments” were dealt out when her children forgot their lines or refused to participate in content creation (Edney, 2022). The police also reported that at least one of her children experienced molestation (Edney, 2022). When investigators found the children, they were covered in burn marks, scars, and bruises and appeared to be extremely malnourished (Crenshaw, 2021). Machelle passed away before she could stand trial (Edney, 2022). In this scenario her assets were divided amongst all of her children, an outcome most child social media stars never see (Edney, 2022). Although they were somewhat financially compensated, these children will face a lifetime of psychological trauma due to the immense abuse (Crenshaw, 2021). Even more upsetting is the fact that authorities were notified of possible abuse over ten times since 2013 yet claimed the allegations were “unsubstantiated” (Crenshaw, 2021). These children were let down by their mother, law enforcement, and social media platforms.

The above examples highlight the need for laws protecting child social media stars. Laws similar to those that have been put in place for child actors could help protect children on social media. Most provinces in Canada, and states in the U.S., have laws and regulations in place to protect children in the entertainment industry (Edney, 2022). One of the most well-known laws is one in California called the “Coogan Act”. The Coogan Act was named after child actor Jackie Coogan and put into place because of his harrowing experience (Edney, 2022). Coogan gained stardom after appearing in a silent film with Charlie Chaplin. The child star went on to perform in many other popular roles and earned an estimated four million dollars by the time he turned eighteen (Lopez, 2014). He was shocked to find out that his parents had spent most of his earnings, stating they had the right to spend that money however they wanted to (Lopez, 2014). His mother was quoted stating “No promises were ever made to give Jackie anything. Every dollar a kid earns before he is twenty-one belongs to his parents.” (Lopez, 2014). Jackie sued his parents but after legal fees only walked away with about $125,000 (Edney, 2022). The case garnered attention from both the media and the California government. In 1939 California officially put the Coogan Act into effect and revised it throughout the years to offer even more protection (Edney, 2022). A key component of the law is that 15% of a child’s earnings must be set aside in a blocked trust account that neither the child nor the parents can access (Edney, 2022). Once the child turns 18, they are able to access the trust. The act also includes rules about how many consecutive days or hours a child can work. British Columbia has similar laws in place. BC entertainment laws state that if a child earns more than $2,000 for a project, 25% of their earnings must be put into a Public Guardian and Trustee (PGT) account (Government of British Columbia, n.d.).
Like a Coogan account, the PGT account cannot be accessed by the child’s parents and is not released to the child until they turn 19 (PGT, 2021). Money from the PGT can only be released before this to help pay the child’s medical expenses or pay for their education (PGT, 2021). In this case a Guardianship and Trust Officer will work with the child and parent (PGT, 2021). The officer must ensure the withdrawal of funds is in the best interest of the child before withdrawal is approved (PGT, 2021).

Blurring or covering a child’s face with an emoji can be a good way to share a photo or video while still protecting the child’s privacy.

While these laws may not be perfect, they at least ensure the child is somewhat protected. Although these laws are meant to protect children in the entertainment industry, none of them apply to children in monetized social media content. Some social media platforms have attempted to rectify this by changing their guidelines, but few are effective. YouTube has stated that it will reduce monetization on channels featuring children that encourage “negative behaviour” or include substantial amounts of commercial or promotional content (YouTube, 2023). Parents can easily bypass this by keeping their promotional content on other platforms such as Instagram. YouTube has also been known to be incredibly inconsistent in enforcing its guidelines. Instagram’s guidelines state that children under sixteen will have their accounts default to private (Bond, 2021). Additionally, the platform will block adults from interacting with children on the app if they have been reported or blocked by other young users (Bond, 2021). Although Instagram does not allow children under thirteen to create an account (Bond, 2021), it is easy to bypass this system by lying about one’s age upon registration. TikTok (n.d.) has a similar policy in which children between thirteen and fifteen have their accounts default to private. TikTok (n.d.) also has a minimum age of thirteen, but users can easily lie about their age. Parents can bypass all these rules by posting their child-centered content to their own account or putting “account run by a parent” in the bio (C. Thorpe, personal communication, March 3, 2023). None of these guidelines explicitly protect children who are featured in family content.

Parents can share a sweet moment with their child by taking a photo where identifying features are not shown.

Currently, there are no laws in place protecting children who are put on the internet by their parents for the sake of content and money. Governments and social media platforms are failing a generation of children by not creating laws and policies to protect their wellbeing. While some may think social media content isn’t taxing, parents often feel pressure to constantly put out content in order to gain more brand deals and maintain relevance. Children featured in popular social media content must deal with loss of privacy, missing school, and the pressure of growing up in the public eye (Edwards, 2023). Being filmed all day can be extremely confusing for children, as they cannot decipher what is for content and what is real life. That said, sharing children on social media isn’t always bad. It can be a wonderful way for many parents to share their child with loved ones and family members that live abroad. Many parents are aware of the dangers of social media. These parents are conscious of their child’s presence online and the impact it may have on them in the future (Edney, 2022). One way to protect your child is by blurring their face or placing an emoji over it. Another way is to keep your social media accounts private and shareable only with a select group of trusted people. At the end of the day, sharing your child publicly online for your own personal gain is rarely ever worth the consequences.

References

Belcher, S. (2020, May 6). DaddyOFive’s Mike and Heather Martin Were Driven off the Internet After Child Abuse Claims. Distractify. https://www.distractify.com/p/daddyofive-now

Bond, S. (2021, July 27). Instagram Debuts New Safety Settings For Teenagers. NPR. https://www.npr.org/2021/07/27/1020753541/instagram-debuts-new-safety-settings-for-teenagers

Crenshaw, Z. (2021, May 14). ‘A failure of the system’: Kids told DCS and police about prior ‘YouTube Mom’ abuse. ABC 15 Arizona. https://www.abc15.com/news/region-central-southern-az/maricopa/a-failure-of-the-system-kids-told-dcs-and-police-about-prior-youtube-mom-abuse

Edney, A. (2022). “I Don’t Work for Free”: The Unpaid Labor of Child Social Media Stars. University of Florida Journal of Law & Public Policy, 32(3), 547–572.

Edwards, M. (2023). Children Are Making It Big (For Everyone Else): The Need For Child Labor Laws Protecting Child Influencers. SSRN Electronic Journal. http://doi.org/10.2139/ssrn.4351827

Eelmaa, S. (2022). Sexualization of Children in Deepfakes and Hentai. TRAMES: A Journal of the Humanities & Social Sciences, 26(2), 229–248. https://doi.org/10.3176/tr.2022.2.07

Elliott, J. K. (2020, May 28). YouTube mom Myka Stauffer says she gave up adopted son with autism. Global News. https://globalnews.ca/news/6997451/myka-stauffer-adoption-autism-youtube/

Government of British Columbia. (n.d.). Children in Recorded Entertainment Industry: Regulation Part 7.1, Division 2.

Koo, Y. (2019). “We Deserve to Be Here”: The Development of Adoption Critiques by Transnational Korean Adoptees in Denmark. Anthropology Matters Journal, 91(1), 35–71.

Lopez, D. (2014, April 2). 7 Celebs Whose Parents Decimated Their Fortunes. Insider. Retrieved from https://www.businessinsider.com/7-celebs-whose-parents-decimated-their-fortunes-2014-4

Mettler, K. (2019, November 13). This ‘YouTube Mom’ was accused of torturing the show’s stars: her own kids. She died before standing trial. The Washington Post. https://www.washingtonpost.com/crime-law/2019/11/13/popular-youtube-mom-who-was-charged-with-child-abuse-has-died/

Public Guardian and Trustee of British Columbia. (2021). Child and Youth Services: Trust Services. https://www.trustee.bc.ca/services/child-and-youth-services/Pages/trust-services.aspx

TikTok. (n.d.). Guardian’s Guide. https://www.tiktok.com/safety/en/guardians-guide/

YouTube Help. (2023). YouTube channel monetization policies. https://support.google.com/youtube/answer/1311392#kids-quality&zippy=%2Cquality-principles-for-kids-and-family-content