Cole Davies

Cole Davies is a student in the psychology program at Capilano University. Recently, he has become very interested in computer science and, after graduating with a bachelor’s degree in psychology, hopes to pursue a degree in that field.

“It” had been unleashed on the general population for two months when I first heard a teacher proclaim, “The essay as we know it is dead.” Now, maybe they were just being hyperbolic (as English teachers are known to be), but maybe they had a point. A few days later I heard about “it” on the news, this time in a story about how it has the capacity to take an estimated 300,000,000 jobs away from people. Suddenly, everyone was talking about “it”: teachers, news anchors, everyone. “It” had even just been banned in Italy. A UBS analyst had this to say about its popularity: “in 20 years following the internet space, we cannot recall a faster ramp in a consumer internet app” (Chow, 2023). The “it” is artificial intelligence, and it truly is everywhere. What is really important, however, is knowing what drives it and taking the steps to understand this technology as a whole. In this article I aim to investigate the mechanisms of AI, explain deep learning and how it relates to artificial intelligence, and assess the potential impact that this technology could have on our society.

Since the very inception of computing, the concept of machines acting as intelligent beings or rational actors has been an extremely important one. In 1943, Warren McCulloch and Walter Pitts conceived of an idea that connected cognition and computer systems. Using biology as a starting point, they took the neuron in the human brain as a model for developing an “intelligent machine.” Computer science was a brand-new field and a very hot topic at the time. McCulloch and Pitts first theorized a model of interconnected circuits that could be used to simulate intelligent behaviour. In the following years, time was spent trying to incorporate this idea into machines, with little success. It wasn’t until 1954 that this goal was achieved. These connected circuits contained nodes which functioned similarly to neurons, each taking inputs and producing an output, with weights associated with the inputs the node was receiving. Because the blueprint for this technology was based on our understanding of neurons and the human brain at the time, the term neural network was coined and used when referring to this technology (NNET for short). Frank Rosenblatt (1958) created the “perceptron” model, which was a large step in the development of pattern recognition. Around the same time, in 1950, Alan Turing (often regarded as a progenitor of computer science) published a paper titled “Computing Machinery and Intelligence,” which opens with the question “Can machines think?” and examines the concept of machines displaying some sort of intelligent thought. As technology developed, so too did the concept of artificial intelligence. By the late 1990s, many of the landmark goals in artificial intelligence as we know it had been achieved. In 1997, world-renowned chess player Garry Kasparov was defeated by IBM’s Deep Blue, a computer system specifically designed to play chess. Another huge leap forward happened that same year, when the long short-term memory model (LSTM for short) was developed. 
LSTM networks allow information to persist within them by using a looping structure (which allows information to be passed from one step of the network to the next). This development set the stage for one of the biggest advancements in the field of artificial intelligence. In 2014, the concept of GANs (generative adversarial networks) was introduced in a paper by Ian Goodfellow and his associates in Montreal. NNETs, and in many cases GANs, are what allow most AI (such as ChatGPT, deepfakes, and even services that check your spelling and whether or not you plagiarised your work) to work in the way that it does. Because GANs and NNETs are so crucial to the fabric of AI, it is important to have a basic idea of how exactly they work and what they do (The history of Artificial Intelligence, 2020).

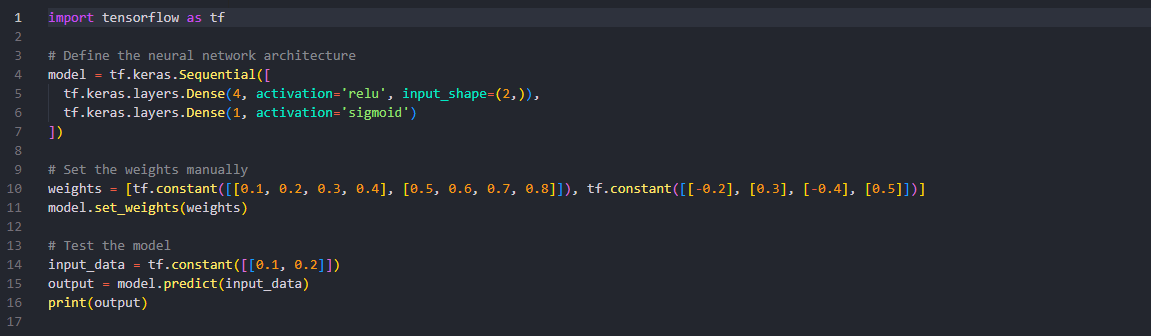

A basic implementation of a neural network written in the Python programming language.
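For readers who cannot make out the figure, a comparable network can be written in a few lines of plain Python. This is only a sketch under assumptions, not a transcription of the figure: the function names (`sigmoid`, `layer`, `predict`), the layer sizes, and the constant weight values are all hypothetical choices made for illustration. It shows input values passing through one hidden layer and one output layer, with a sigmoid function squashing each node's result into the range 0 to 1.

```python
import math

def sigmoid(x):
    """Squash any real number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights):
    """Compute one layer. Each row of `weights` belongs to one node and
    holds one weight per incoming connection."""
    return [sigmoid(sum(x * w for x, w in zip(inputs, node_weights)))
            for node_weights in weights]

# Hypothetical constant weights: 2 inputs -> 3 hidden nodes -> 1 output node.
HIDDEN_WEIGHTS = [[0.2, 0.4], [0.7, 0.1], [0.5, 0.3]]
OUTPUT_WEIGHTS = [[0.6, 0.1, 0.8]]

def predict(inputs):
    """Pass the inputs forward through the hidden layer, then the output layer."""
    hidden = layer(inputs, HIDDEN_WEIGHTS)
    return layer(hidden, OUTPUT_WEIGHTS)

print(predict([1.0, 0.5]))
```

Data moves in one direction only here: `predict` feeds the inputs to the hidden layer and the hidden layer's results to the output layer, and nothing flows back.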

NNETs are one of the ways in which deep learning is conducted. Deep learning refers to the idea that a computer learns how to do a task by analysing and learning from “training data.” Most modern NNETs consist of millions of nodes connected to one another, organized into layers. First is the input layer, which takes user input (if you have ever used ChatGPT, this would be the stage in which you ask it a question). Second come one or more hidden layers, where all of the mathematical calculations and background work get done. Lastly, the output layer returns the result based on the integration of the input and hidden layers. It is important to note that in a basic (feedforward) NNET, data only flows one way: you cannot start with an output and return an input. Each node assigns a weight to each of its incoming connections, and that weight is applied whenever an input arrives. In practice a weight can be any number, positive or negative, but to keep the example simple, suppose every incoming connection to a particular node has a weight of 0.24. Each weight is multiplied by the data item arriving on its connection, and the node then sums these products across all of its incoming connections. For example, say the node had three incoming connections and received inputs of four, five, and two. You would multiply four, five, and two by 0.24 and then add the three products together: 0.96 + 1.2 + 0.48 = 2.64. This number would then be checked against the threshold of the node, and if it were below the threshold, the node would not fire. If it were above the threshold, however, the node would “fire,” sending its output along all of its outgoing connections. When initially training an NNET, all of the weights are set to random values. The code in the accompanying figure is a barebones implementation of the ideas explained above.
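The arithmetic for the single node described above can be checked with a short Python sketch. The inputs (four, five, and two) and the weight of 0.24 come from the example in the text; the function names and the two threshold values (2.0 and 3.0) are assumptions chosen purely for illustration, since the text does not give a threshold.

```python
def weighted_sum(inputs, weights):
    """Multiply each input by the weight on its connection and add the products."""
    return sum(x * w for x, w in zip(inputs, weights))

def node_output(inputs, weights, threshold):
    """Return the weighted sum if the node fires, otherwise 0.0 (no output sent)."""
    total = weighted_sum(inputs, weights)
    return total if total > threshold else 0.0

inputs = [4, 5, 2]              # values arriving on the three connections
weights = [0.24, 0.24, 0.24]    # the same weight on every connection, as in the text

print(round(weighted_sum(inputs, weights), 2))            # 2.64
print(node_output(inputs, weights, threshold=2.0) > 0)    # True: 2.64 is above 2.0, so the node fires
print(node_output(inputs, weights, threshold=3.0) > 0)    # False: 2.64 is below 3.0, so it stays silent
```

Having a firing node pass along its weighted sum follows the description in Hardesty (2017); other designs pass the sum through a function such as a sigmoid instead.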

Even without any coding or programming knowledge, it is fairly straightforward to follow what is happening in that code. First, the layers are defined and initialized (“sigmoid” refers to a sigmoid function, which transforms any number in its domain into a number between 0 and 1). Second, the weights are set using constant numbers. Finally, the output layer is given to the user based on the input data.

GANs are made up of two different NNETs that are pitted against each other (the “adversarial” part of the name generative adversarial network): a generator and a discriminator. The generator starts by producing examples that are extremely poor, which become a point of reference for the discriminator. The discriminator has real examples of whatever is being generated (realistic images, for instance), and it repeatedly checks whether the output of the generator could pass for one of them. As the training progresses, the generator starts to produce results that are able to fool the discriminator, i.e., the generator’s output is now so lifelike that it cannot be distinguished from the real thing. This is an example of deep learning at work. Interestingly, if you do not give the AI any training data, it will output an image of pure “noise,” which is displayed below. This is, overall, a very basic idea of how AI works. There are many other facets to this topic, such as backward propagation and gradient descent, but those are far beyond the scope of this article.
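The tug-of-war between generator and discriminator can be sketched with a deliberately tiny example in which the “images” are single numbers. Everything here is an assumption made for illustration: the real data are numbers clustered around 4, the generator is just `a * z + b` applied to random noise `z`, the discriminator is a single sigmoid node, and all function names are hypothetical. The weight updates use gradient formulas that the article leaves out of scope; they are included only so that the sketch runs, and a real GAN would use far larger networks and many rounds of training.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def mean(values):
    return sum(values) / len(values)

def generator(z, a, b):
    """Turn a random noise value z into a fake sample."""
    return a * z + b

def discriminator(x, w, c):
    """Estimated probability (between 0 and 1) that x is a real sample."""
    return sigmoid(w * x + c)

def d_score(real, fake, w, c):
    """High when the discriminator calls real samples real and fakes fake."""
    return (mean([math.log(discriminator(x, w, c)) for x in real])
            + mean([math.log(1.0 - discriminator(x, w, c)) for x in fake]))

def g_score(fake, w, c):
    """High when the discriminator mistakes the generator's fakes for real."""
    return mean([math.log(discriminator(x, w, c)) for x in fake])

def train_round(real, noise, a, b, w, c, lr=0.05):
    """One adversarial round: nudge the discriminator, then the generator."""
    fake = [generator(z, a, b) for z in noise]
    # Discriminator step: move w and c uphill on d_score.
    dw = (mean([(1.0 - discriminator(x, w, c)) * x for x in real])
          - mean([discriminator(x, w, c) * x for x in fake]))
    dc = (mean([1.0 - discriminator(x, w, c) for x in real])
          - mean([discriminator(x, w, c) for x in fake]))
    w, c = w + lr * dw, c + lr * dc
    # Generator step: move a and b uphill on g_score against the updated discriminator.
    slack = [(1.0 - discriminator(generator(z, a, b), w, c)) * w for z in noise]
    da = mean([s * z for s, z in zip(slack, noise)])
    db = mean(slack)
    return a + lr * da, b + lr * db, w, c

random.seed(0)
real = [random.gauss(4.0, 0.5) for _ in range(200)]   # stand-in for "real images"
noise = [random.gauss(0.0, 1.0) for _ in range(200)]  # random input to the generator
a, b, w, c = 1.0, 0.0, 0.0, 0.0                       # untrained: fakes are pure noise

fake = [generator(z, a, b) for z in noise]
print("discriminator score before:", d_score(real, fake, w, c))
a, b, w, c = train_round(real, noise, a, b, w, c)
print("discriminator score after: ", d_score(real, fake, w, c))
```

Before any training, the generator simply passes the noise through unchanged, which mirrors the point above about an untrained model producing pure noise. Repeating `train_round` many times is what gradually pulls the generator's output toward the real data, although simple setups like this one can oscillate rather than settle.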

An image of pure noise. This is what is generated when an AI does not have any material to train from.

Having discussed the underlying mechanisms of this technology, let us now look at the potential impact that AI can have on our day-to-day lives, starting with some of the benefits it offers. First, I predict that AI will have a positive impact on the field of computer science. One of the first things I tested when ChatGPT was released to the general public in 2022 was its capacity to generate code. Because these AI are so effective at coding, there is potential for them to handle the mundane coding tasks such as structure, importing modules correctly, proper syntax, etc. Since the actual coding is secondary in computer science, and the primary goal is problem solving and breaking down questions, this allows programmers to focus on the more abstract ideas while saving the time they would otherwise spend on grunt work. Companies have even started to sell AI coding assistants as of late, which shows this idea is already coming to fruition. Another benefit comes into play in the field of cyber security. By using machine learning in the way outlined above, we can train AI models to learn from cyber attacks and thus to defend against them.

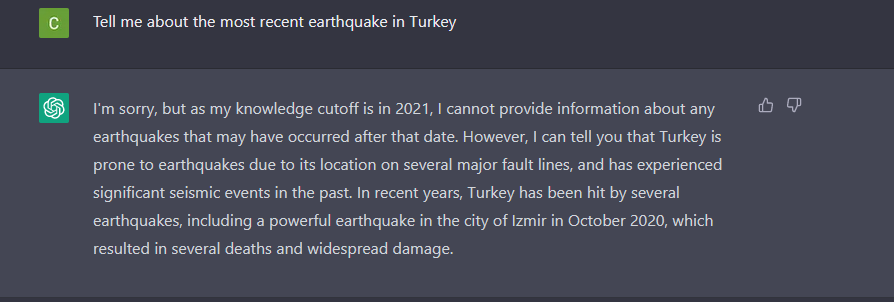

An image of a conversation I had with ChatGPT depicting its inability to provide information past 2021.

Looking outside the immediate scope of computer science, there are practical applications in other fields such as medicine. A research paper by Shaheen (2021) looked at how AI and the field of medicine interact with one another and found that “Artificial intelligence can not only aid in the development of useful pharmaceuticals, but also improve the efficacy of current ones after they have been developed. Although AI systems in healthcare are currently restricted, the medical and economical benefits are too great to overlook.” This technology is even applicable outside the realm of science. Rao (2019) investigated how AI could be used to improve the field of construction and identified a couple of possibilities. One is preventing cost overruns: AI can be used to estimate cost based on metrics such as the size of the project, the type of contract it is under, and the competence of the managers overseeing the project. Another is generative design, through which AI has the capacity to build better models. Ultimately, we are only scratching the surface of the power this technology has and will continue to have. However, it is important to consider that this technology, as a whole, is a mixed blessing.

The concerns over AI that have cropped up are, from my perspective, extremely valid. The ways in which raw data can be altered by AI are truly astonishing. This brings us to the topic of deepfakes. The term deepfake is a portmanteau (a blend of parts of two separate words) of “deep learning” and “fake.” Deep learning, as described above, has to do with the outputs of neural networks based on weights assigned across a large number of interconnected nodes. The practical upshot is that this technology can be used to generate an image, audio, or video that is not real. Dixon (2019) described deepfakes as “scarier than photoshop on steroids.” These are not shallow edits either, mind you, but media that looks almost indistinguishable from reality because the AI learns from real images, audio, and video in training. Obviously, this is of great concern, as there are numerous ways to misuse it. The first (and probably most obvious) is non-consensual pornographic media. There have been many reports of celebrities having their likeness used in pornographic media without their participation or consent. The second is the capacity for this technology to spread misinformation and fake news. The internet is already rife with misinformation, and deepfakes could make the problem dramatically worse. A less obvious but still extremely important problem is how our current legal system deals with deepfakes. As this technology is brand new and is only just seeing widespread practical application, our laws are not equipped to deal with the nuances it presents. For instance, if I made a deepfake of someone using images that were publicly available, would that be considered illegal? Or if I disclosed from the get-go that the video, image, or audio is fake, what would the legal ramifications be?

An image of my hand to compare with the hand generated by AI.

An AI-generated image of a hand, showcasing the difficulty this technology has with generating hands.

As dark as it is, there are also questions to be raised about this technology and child pornography. If people use this tech to generate heinous content such as child pornography without the depicted events ever having taken place, would the legal system be able to treat it as such? In a paper by Kugler and Pace (2021), the authors assessed the impact of deepfakes. They sorted the potential harm deepfakes can cause into two categories: individual harms to the subject’s dignity and emotional well-being, and wider societal harms that have to do with national security and democratic institutions. Individual harms encompass non-consensual pornography and any harm stemming from the release of the video or picture, or caused by the public’s perception of it. This should be cut and dried, right? But it’s not! This is because deepfakes do not technically depict the subject’s naked body, only their face. In addition, the subjects did not actually engage in the sexual acts they are portrayed as performing. These invasions of privacy can nonetheless have numerous psychological effects on their victims, including depression, loss of appetite, and suicidal ideation. Furthermore, there is potential for social backlash in the form of lost job opportunities and greater difficulty when seeking employment. Earlier on, I mentioned that deepfake technology has the capacity to spread vast amounts of misinformation. This is where the second type of harm discussed by Kugler and Pace (2021) comes into effect. In 2018, a video of Barack Obama was released showing him saying ridiculous things such as “Stay woke bitches” and “Ben Carson is in the sunken place.” Only at the end was it disclosed that it was all faked. While there haven’t been any sophisticated disinformation campaigns using deepfake technology yet (for instance, to try to change the outcome of an election), it is only a matter of time before this becomes a reality.

Now, having just described all of the aforementioned positives and negatives, you might be under the impression that “we’re fucked.” And this would be a reasonable conclusion to reach based on what has been said thus far. However, this technology is not a perfect science, and I am going to spend the last portion of this paper discussing the limitations that currently exist. Considering how widely it is used, the first example that comes to mind is ChatGPT. The GPT stands for generative pre-trained transformer (here we can see once again the theme of generation within the context of AI). This technology, while extremely popular, does have a number of issues. First, ChatGPT is a text-based generative AI, meaning that it cannot generate images, video, or audio; furthermore, it will not divulge information that would be considered harmful (how to make viruses, for example). Another fault is that it sometimes gives out false information (referred to as AI hallucinations). ChatGPT also has an information cutoff date of 2021, meaning that it cannot answer with any certainty about events that occurred after this date. The accompanying screenshot shows a conversation I had with ChatGPT about the most recent earthquake in Turkey. As far as AI goes, text generation is much less complex than AI-generated images, voice, and video, which come with a whole host of other limitations. While deepfake images have become extremely realistic, often to the point that the human eye cannot tell them apart from an actual image, there are some quirks in how all of this works. One quirk is the inability of AI to produce realistic-looking hands and feet. The accompanying photo shows my own hand. Compare it with one generated by AI, and the difference is immediately noticeable. There are a number of reasons why this occurs. 
Very simply, hands are complex and require a number of things to look natural. Joints, accurate shading, the lines in the hand, and accurate anatomy (such as humans having five fingers) are quite complicated for an AI to generate. In addition, because every human hand is different (shape, skin colour, blemishes, etc.), the AI has a hard time finding common points across its training data. Finally, the way in which we perceive hands poses a problem for AI: because hands are so intricate, any anomaly is immediately more noticeable than, say, a leg being slightly too short. Looking at deepfakes, there are a number of limitations here as well (although these could be positives, depending on your view of deepfakes as a whole). DeepFaceLab is one of the premier tools used to create deepfakes. I would argue, however, that it is not very accessible to the general public yet. First, very high computer specs are required to run this software (at least a 3000-series graphics card), and such hardware is very expensive and not commonly owned by the average person. Second, significant knowledge is required to operate it: this is not easy software to use, and it demands a high degree of familiarity with photo editing and similar skills. Ultimately, at least for now, deepfakes are still not very accessible (at least when compared to something like ChatGPT).

In conclusion, this article has covered the history of AI development and the underlying mechanisms of NNETs and GANs, along with the potential positive and negative impacts that this technology could have on us. Finally, we explored the limitations that inherently exist within generative AI and deepfakes. Ultimately, I cannot say what the future holds. We have, in a sense, reached a point of no return with AI technology: “It is essentially an arms race between people who are generating this content and people trying to detect it” (K. Dajani, personal communication, February 26, 2023). It’s uncertain where this technology will go in the future, as all of this is extremely cutting edge. So much so that by the time you read this paper, parts of it could already be outdated.

Bibliography

A beginner’s guide to generative AI. Pathmind. (n.d.). Retrieved April 5, 2023, from https://wiki.pathmind.com/generative-adversarial-network-gan

Brownle, J. (2019, June 17). Understanding LSTM networks. Understanding LSTM Networks — colah’s blog. Retrieved April 4, 2023, from https://colah.github.io/posts/2015-08-Understanding-LSTMs/

Calderon, R. (2019). The benefits of artificial intelligence in cybersecurity. Economic Crime Forensics Capstones, 36. https://digitalcommons.lasalle.edu/ecf_capstones/36

Chow, A. R. (2023, February 8). Why CHATGPT is the fastest growing web platform ever. Time. Retrieved April 19, 2023, from https://time.com/6253615/chatgpt-fastest-growing/

Google. (2022, July 18). Overview of gan structure | machine learning | google developers. Google. Retrieved April 6, 2023, from https://developers.google.com/machine-learning/gan/gan_structure

Hardesty, L. (2017, April 14). Explained: Neural networks. MIT News | Massachusetts Institute of Technology. Retrieved April 3, 2023, from https://news.mit.edu/2017/explained-neural-networks-deep-learning-0414

The history of Artificial Intelligence. Science in the News. (2020, April 23). Retrieved April 1, 2023, from https://sitn.hms.harvard.edu/flash/2017/history-artificial-intelligence/

Islam, A. (2023, March 21). A history of generative AI: From gan to GPT-4. MarkTechPost. Retrieved April 6, 2023, from https://www.marktechpost.com/2023/03/21/a-history-of-generative-ai-from-gan-to-gpt-4/

Kugler, M. B., & Pace, C. (2021). Deepfake Privacy: Attitudes and Regulation. Northwestern University Law Review, 116(3), 611–680.

Singh, H. (2021, March 12). Deep learning 101: Beginners Guide to Neural Network. Analytics Vidhya. Retrieved April 4, 2023, from https://www.analyticsvidhya.com/blog/2021/03/basics-of-neural-network/

What is LSTM – introduction to long short term memory (2023, March 4). Intellipaat Blog. Retrieved April 4, 2023, from https://intellipaat.com/blog/what-is-lstm/

Why can’t ai draw realistic human hands? Dataconomy. (2023, January 25). Retrieved April 8, 2023, from https://dataconomy.com/2023/01/how-to-fix-ai-drawing-hands-why-ai-art/

Yeasmin, S. (2019, May). Benefits of artificial intelligence in medicine. In 2019 2nd International Conference on Computer Applications & Information Security (ICCAIS) (pp. 1-6). IEEE.